Our Vision: AI for Smarter Orthopaedic Care

AI and ML approaches to orthopaedic surgery

At the intersection of orthopaedic surgery and artificial intelligence, our research focuses on building real-world tools that enhance clinical decision-making, streamline diagnostics, and expand research capability. Our goal is to translate cutting-edge machine learning techniques into practical solutions that improve care across diverse settings, from high-resource hospitals to community clinics.

Two key pillars define our AI innovation strategy:

1. Computer Vision Models for Radiographic Intelligence

We are developing computer vision models that aim to bring radiographic intelligence to the front lines of orthopaedic care, starting with a simple but powerful idea: what if an iPhone photo of an X-ray could provide immediate clinical insight? Through OrthoView AI, we are building models that classify anatomical region, view type, and basic diagnoses (e.g., fracture, arthritis, tumor), trained on a combination of public datasets, hospital images, and even screenshots. These tools are not designed to replace radiologists or orthopaedic surgeons. They are designed to support decision-making where it is needed most, such as urgent care clinics, rural hospitals, and early-stage training environments. Our goal is a lightweight, cloud-based system that delivers fast, actionable insights from plain X-rays, ultimately enabling triage, referrals, and education in settings where orthopaedic expertise is limited or delayed.

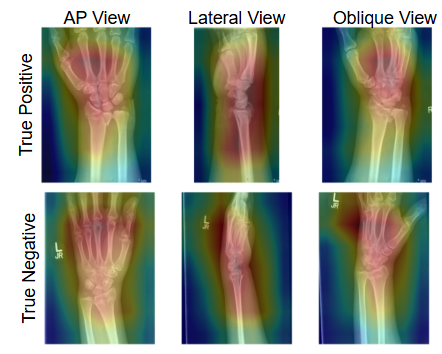

We have already developed proof-of-concept models focused on distal radius fractures, demonstrating that deep learning can differentiate between cases requiring surgical reduction and those amenable to non-operative care. Building on this work, we are now assembling a more comprehensive and diverse dataset of orthopaedic radiographs across all major anatomical regions, drawing on both publicly available sources and locally collected clinical images. This work is being conducted under Baylor College of Medicine IRB #H-55520 and is foundational to our goal of building a generalizable, accessible AI system for real-world clinical support.

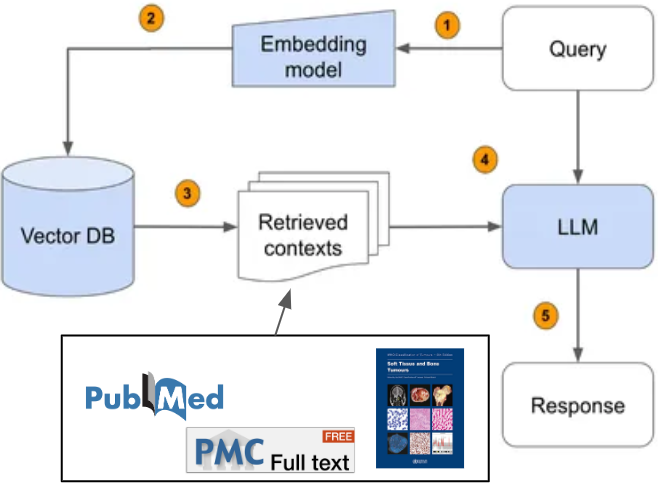

2. Retrieval-Augmented Generation (RAG) for Orthopaedic Reasoning

We are building a next-generation Retrieval-Augmented Generation (RAG) platform tailored to the unique demands of orthopaedic surgery. The system retrieves medical evidence in real time and combines it with domain-specific reasoning to deliver context-aware answers that support both clinical decision-making and patient education. Unlike general-purpose AI tools, our models are grounded in orthopaedic domain knowledge, incorporating terminology, imaging logic, and procedural nuance to generate meaningful insights from the literature.

Our initial work has focused on RAG-enhanced research idea generation and pathology report interpretation. We are now expanding toward a modular platform that can synthesize evidence into clinical recommendations, treatment pathways, and patient-friendly summaries. Designed with future agentic capabilities in mind, this platform lays the foundation for intelligent orthopaedic assistants that are fast, evidence-grounded, and aligned with how surgeons think.
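To make the retrieve-then-generate idea behind RAG concrete, here is a minimal sketch of the retrieval and prompt-grounding steps only. It is an illustration under stated assumptions, not our platform's implementation: the passage corpus is a hypothetical toy stand-in for indexed orthopaedic literature, the retriever is a plain TF-IDF bag-of-words cosine ranker (a production system would more likely use dense embeddings and a vector index), and the final LLM call is deliberately left out. The names `PASSAGES`, `retrieve`, and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus standing in for indexed orthopaedic literature.
PASSAGES = [
    "Distal radius fractures with dorsal angulation may require closed reduction.",
    "Total knee arthroplasty outcomes depend on alignment and implant position.",
    "Adolescent idiopathic scoliosis is assessed with sagittal and coronal radiographs.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(passages: list[str]) -> tuple[list[Counter], dict[str, float]]:
    """Return per-passage term counts and smoothed inverse document frequencies."""
    docs = [Counter(tokenize(p)) for p in passages]
    n = len(docs)
    df = Counter(term for doc in docs for term in doc)
    idf = {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in df.items()}
    return docs, idf


def tfidf(counts: Counter, idf: dict[str, float]) -> dict[str, float]:
    # Terms never seen in the corpus get weight 0, so they cannot create a match.
    return {t: c * idf.get(t, 0.0) for t, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse term-weight vectors."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Retrieval step: rank passages by similarity to the query, keep the top k."""
    docs, idf = build_index(passages)
    q = tfidf(Counter(tokenize(query)), idf)
    scored = sorted(
        ((cosine(q, tfidf(d, idf)), i) for i, d in enumerate(docs)),
        reverse=True,
    )
    return [passages[i] for _, i in scored[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Grounding step: wrap retrieved passages around the question for an LLM."""
    sources = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(context))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    question = "When does a distal radius fracture need reduction?"
    print(build_prompt(question, retrieve(question, PASSAGES, k=1)))
```

The design point the sketch shows is that the language model only ever sees the question together with numbered, retrieved sources, which is what lets answers be checked against the literature. Swapping the TF-IDF ranker for an embedding model changes `retrieve` but leaves the grounding step unchanged.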

Research Projects

Here’s a snapshot of current and recent projects across our research domains:

Unsupervised Learning & Traditional ML

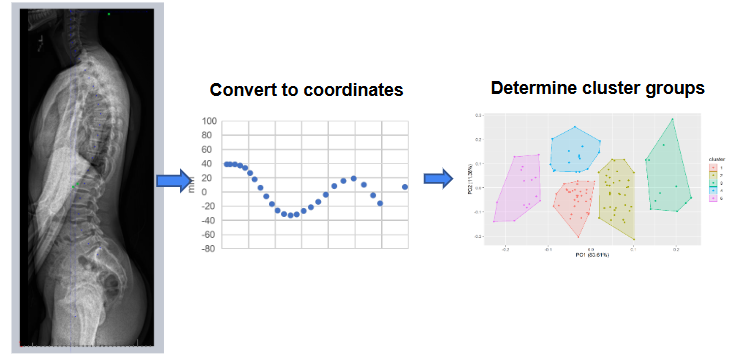

- Spine Alignment Clustering (Birhiray et al.): Identified distinct subtypes of sagittal spine balance in adolescents using unsupervised learning.

- Lumbar Fusion Risk Stratification (Noorkbakhsh et al.): Applied clustering methods to identify complication-prone patient subtypes after spine surgery.

- Spine Research Topic Mapping: Mined and clustered 10 years of spine abstracts to visualize evolving research trends.
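The projects above do not specify which clustering algorithms were used. Purely as an illustration of how unsupervised learning can surface patient subtypes without labels, here is a minimal k-means (Lloyd's algorithm) sketch in plain Python. The two features and all values in `POINTS` are hypothetical toy data (imagine z-scored pelvic incidence and lumbar lordosis), and seeding the centroids with the first `k` points is a simplifying assumption made for reproducibility.

```python
import math

Point = tuple[float, ...]

# Hypothetical, already-standardized toy measurements (two features per patient).
POINTS: list[Point] = [
    (-1.0, -1.2), (1.1, 0.9), (-0.8, -1.0),
    (1.0, 1.2), (-1.2, -0.9), (0.9, 1.0),
]


def mean(points: list[Point]) -> Point:
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(coord) / len(points) for coord in zip(*points))


def kmeans(points: list[Point], k: int, max_iter: int = 100) -> tuple[list[int], list[Point]]:
    """Lloyd's algorithm; the first k points seed the centroids (deterministic)."""
    centroids = [points[i] for i in range(k)]
    labels = [-1] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points
        ]
        if new_labels == labels:
            break  # converged: no point changed cluster
        labels = new_labels
        # Update step: move each centroid to the mean of its current members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the previous centroid if a cluster empties out
                centroids[j] = mean(members)
    return labels, centroids


if __name__ == "__main__":
    labels, centroids = kmeans(POINTS, k=2)
    print(labels, centroids)
```

In practice, features are standardized first so that no single measurement dominates the distance, the number of clusters is chosen with a criterion such as the silhouette score, and the resulting subtypes are then examined against clinical outcomes.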

Computer Vision Projects

- Fracture Classification (Scioscia et al.): Developed models predicting the need for reduction or surgery in distal radius fractures.

- X-ray View Labeling (Vemu et al.): Automated classification of radiographic views for image set labeling and preprocessing.

- Health-Related Quality of Life (HRQOL) Prediction (Ani, Kita, Moriguchi et al.): Modeled patient-reported outcomes from spinal deformity radiographs.

LLM + NLP Projects

- Research Idea Generation (Noorkbakhsh et al.): Compared research topics generated by a RAG pipeline with human-generated topics.

- Pathology Report Parsing (Cione et al.): Used GPT-based models to parse pathology reports and estimate the relative incidence of sarcoma subtypes.

AI in Healthcare Course (First Term 2025-2026)

We are excited to launch a new elective at Baylor College of Medicine titled “From Code to Care: AI & ML for Health Care Applications.” The course introduces medical students to the fundamentals of artificial intelligence and machine learning, with hands-on experience in Python coding and real-world healthcare data. Over 11 weeks, students will progress from basic programming to building predictive models and evaluating the ethical implications of AI in clinical workflows. For students interested in diagnostics, research, or future clinical integration, the course provides a foundational skill set for the next generation of physician-innovators.

You can review the course syllabus here: MESUR Elective Course Materials

Course Contact: Rohan Vemu